I was immediately skeptical that any scalar mathematical measure could quantify consciousness, as I'm sure anyone not well versed in IIT can empathize with, but it seemed like a lot of people, with a lot more experience than me, were totally on board with Phi as the answer to the hard problem of consciousness (Max Tegmark, for example). Thus, I put my initial doubts aside and decided I had to dig through the details of the theory before I could assess its validity.

Early on, I was captivated by the spirit of IIT. My biggest concern was how one can go from math to consciousness, and IIT speaks directly to this. The basic idea is that if you want to measure consciousness, you have to start with a phenomenological understanding of "what it is like" to be conscious and, from this, "derive" the properties of physical systems that can instantiate this phenomenology. For example, our left and right visual fields are experienced as a single "unified whole", which means information from both eyes must be shared at some point to account for this experience of a unified visual field. Based strictly on this idea, one can posit that physical systems that lack the ability to exchange information necessarily lack consciousness (or take part in two isolated conscious experiences), as the ability to physically "integrate information" seems necessary in order to generate the phenomenal experience of a unified whole. And, in this way, the mathematical formalism of IIT is built.

Mathematical Problems with IIT

In fact, I have a better reason to doubt this, as it was a little known fact at the time that if you do try to calculate Phi for even the simplest possible system (e.g. an AND and an OR gate connected to each other) you will find that it's impossible. The reason for this is that the axioms of IIT do not address what to do in the event that there are degenerate local phi values. In particular, the exclusion axiom states that as part of the optimization process, you must choose the highest phi value as the "core cause" (another bit of vocab) for a given "mechanism". But, if there are two different core causes with the same phi value (an extremely common occurrence) then IIT does not specify which core cause to choose, and your final results are extremely sensitive to this choice.
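To make the degeneracy problem concrete, here is a minimal sketch of what a tie looks like in any "pick the extremal value" step. The candidate names and phi values are made up purely for illustration (they are not computed from a real system), and `tied_optima` is a hypothetical helper, not part of any IIT software.

```python
from typing import Callable, Dict, List

# Hypothetical local phi values for the candidate core causes of one mechanism.
# These numbers are invented to show a tie; they do not come from a real network.
candidate_phi: Dict[str, float] = {
    "A": 0.25,
    "B": 0.25,
    "AB": 0.10,
}


def tied_optima(values: Dict[str, float],
                pick: Callable = max,
                tol: float = 1e-12) -> List[str]:
    """Return every candidate whose value equals the selected extremum (within tol)."""
    best = pick(values.values())
    return sorted(name for name, v in values.items() if abs(v - best) <= tol)


winners = tied_optima(candidate_phi)
# More than one winner means the selection rule alone does not pick a unique candidate.
print(winners)
```

When the returned list has more than one entry, some extra tie-breaking rule has to be supplied from outside the theory, and everything computed downstream inherits that arbitrary choice.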

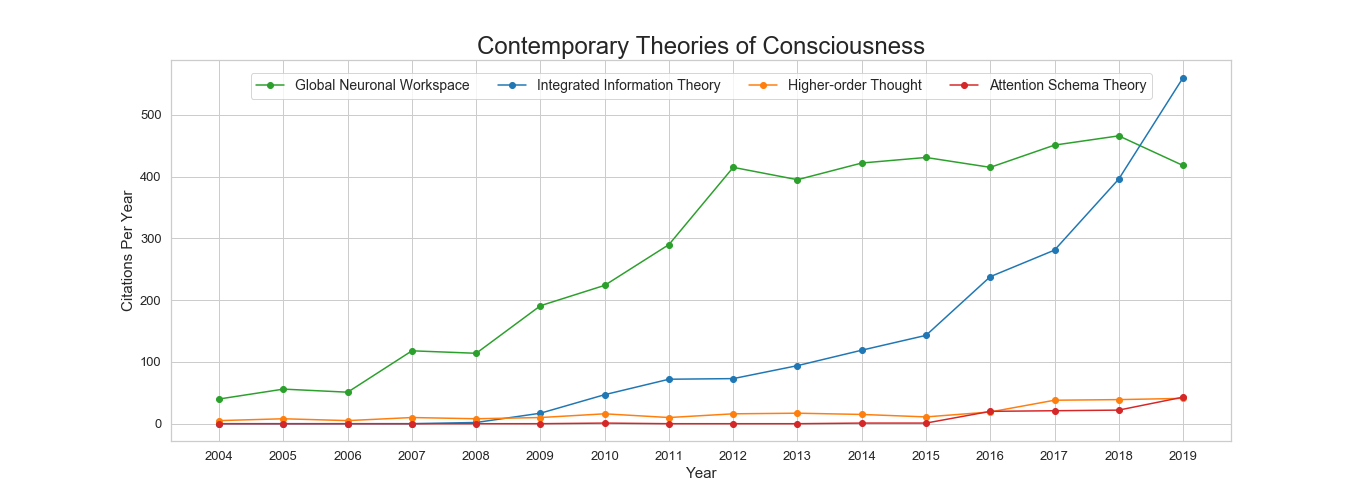

At this point, I started to have serious doubts about the validity of IIT. Here we are, twenty years into a proposed theory of consciousness based on a mathematical measure called Phi that isn't even defined! I was blown away by the fact that IIT was so popular, yet no one talked about the fact Phi isn't unique and couldn't actually be calculated. I started to believe that perhaps all the rhetoric surrounding Phi was responsible for its popularity and that the actual physical underpinnings were nonexistent. In other words, I started to think this whole theory might have nothing to do with reality. Given that this is the most popular theory of consciousness in contemporary neuroscience and has been growing exponentially over the last two decades (Figure 2), this was not a heartening thought for a graduate student with no first-author papers to have, especially under a tight deadline.

Epistemological Problems with IIT

I went about constructing simple examples to explore this idea, proving that it is always possible to fix the (outward) function of a system while changing its (internal) Phi value. Thus, Phi is completely decoupled from function. It had now been another six months since starting this project, and I thought this would have to be good enough for a paper. Having shown that Phi has nothing to do with input-output behavior, it seemed to me that whatever Phi claims to be measuring, it can't be justified in terms of subjective experience, as one must simply assume that a difference in subjective experience exists in absence of additional functional consequences - an assumption that can't be tested.
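As a rough illustration of what "same function, different internals" means, the sketch below compares a unit that feeds back into itself with an unrolled chain of units that never read their own output. This is a deliberately simplified stand-in, not the construction I actually used, and it does not compute Phi at all; the function names and the input length `T` are assumptions chosen for the example. It only checks that the two wirings produce identical outputs for every input.

```python
from itertools import product
from typing import List, Sequence


def recurrent_parity(bits: Sequence[int]) -> List[int]:
    """One unit whose state feeds back into itself: s <- s XOR x."""
    state = 0
    outputs = []
    for x in bits:
        state ^= x  # the next state depends on the unit's own current state
        outputs.append(state)
    return outputs


def unrolled_parity(bits: Sequence[int]) -> List[int]:
    """A chain of distinct units, one per time step.

    Unit t reads only unit t-1 and input t, so no unit ever reads its own
    output and the wiring is feedforward.
    """
    units: List[int] = []
    for t, x in enumerate(bits):
        upstream = units[t - 1] if t > 0 else 0
        units.append(upstream ^ x)
    return units


T = 4  # hypothetical bound on input length, needed for the unrolled version
same = all(
    recurrent_parity(b) == unrolled_parity(b)
    for b in product([0, 1], repeat=T)
)
print(same)
```

An outside observer who only sees inputs and outputs cannot tell these two systems apart, yet one has feedback and the other does not, which is exactly the kind of internal difference that Phi is sensitive to.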

I started writing up these results but was plagued by the fact that IIT explicitly addresses the existence of functionally identical systems with different Phi values as part of the 2014 formulation of IIT 3.0. In other words, proponents of IIT were well aware of the fact that philosophical zombies could exist and were somehow completely OK with the idea that what justified the difference in subjective experience (measured by Phi) was Phi itself. Thus, it seemed my contribution was perhaps an interesting way of constructing these systems but something proponents of IIT would easily brush off as inconsequential, as they openly admit that the theory embraces such systems. The deeper issue at hand was one of epistemic justification. How is it that proponents of IIT could justify that what Phi is measuring is in fact consciousness? In the absence of functional differences, how can one say that a zombie system lacks phenomenal properties such as a "unified experience" without simply assuming it to be so? It seemed that the rhetoric being used to justify Phi as a measure of consciousness was grounded entirely in input-output behavior but, if the input-output behavior was fixed, Phi became its own justification scheme in that it was used as both a means and an end.

There was no place this problem was more readily apparent than experiments designed to falsify IIT in a laboratory setting. According to its proponents, if Phi were shown to increase in response to behavioral states we commonly associate with lower levels of subjective experience (e.g. sleep) then the theory would be falsified. Yet, the logical validity of this entire argument is based on the premise that outward behavior is an accurate reflection of internal subjective experience. In other words, for this experiment to validate/falsify IIT one must accept the assumption that when a system appears to be asleep it objectively has a lower level of subjective experience than when it is awake - the same assumption that proponents of IIT reject in defense of philosophical zombies! Thus, it seems proponents of IIT wanted to have their cake and eat it too. Experimental falsification was one of the reasons for IIT's meteoric rise to fame and, indeed, multimillion dollar efforts are still underway to test IIT in a laboratory setting. Yet, the Krohn-Rhodes theorem guarantees that whatever the results of these experiments are, it is possible that the opposite results exist, as what is being measured internally has nothing to do with the input-output behavior of the system. Thus, there is no reason to experimentally test IIT, as it is already falsified a priori...

Resolution

Generalizations of the unfolding argument quickly followed, in which the role of inference was clearly defined [Kleiner and Hoel, 2020]. Crucially, what is needed to test a theory of consciousness are results from an independent inference procedure, such as the inference that sleep is indicative of lower levels of subjective experience. This inference must be made independent of any theoretical framework and used as the benchmark to which predictions from a given theory are compared. If the prediction from the theory doesn't match the results from the inference procedure (e.g. the theory predicts high consciousness when asleep and low consciousness when awake) then the theory is falsified. Furthermore, if one assumes that independent inference procedures are based on input-output behavior such as sleep (an assumption that seems unavoidable) then the ability to vary the prediction from a theory of consciousness under fixed input-output automatically implies the theory is falsified as at least one of the predictions is logically guaranteed to disagree with the results from the inference procedure.
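The logic of that last step can be sketched in a few lines. This is my own toy rendering under stated assumptions, not the formalism from the cited paper: `inferred_level` is a hypothetical inference procedure that looks only at behavior, and the "high"/"low" labels are placeholders.

```python
from typing import Callable, Sequence, Tuple

IO = Tuple[int, ...]


def inferred_level(io_behavior: IO) -> str:
    """Hypothetical inference procedure that depends only on observed behavior."""
    return "high" if sum(io_behavior) > 0 else "low"


def is_falsified(predictions: Sequence[str],
                 io_behavior: IO,
                 infer: Callable[[IO], str] = inferred_level) -> bool:
    """True if at least one theoretical prediction disagrees with the inference."""
    inferred = infer(io_behavior)
    return any(p != inferred for p in predictions)


# Two systems with the same input-output behavior but different predictions.
shared_io = (1, 0, 1)
predictions = ["high", "low"]
print(is_falsified(predictions, shared_io))
```

Because the inference returns a single answer for the shared behavior, two differing predictions can never both match it, so the result does not depend on what the inference procedure actually says.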

With these new results (formalization of unfolding and falsification) in hand, I was able to ground everything I had done in terms of these increasingly familiar formalisms. For example, I could now prove that the Krohn-Rhodes theorem falsifies any theory of consciousness that assumes feedback as a necessary condition, such as IIT. Thus, I was finally able to translate the intuition that motivated my original line of arguments into concrete mathematical proofs - solidifying to myself that IIT is indeed ill-fated.

On the Future of IIT

As a case study, however, IIT remains extremely interesting to me. How is it that the theory is so popular given that it is both quantitatively and qualitatively so poorly defined? Even in light of the unfolding argument and mathematical proofs of falsification, I don't see proponents of IIT giving it up any time soon. I have met many of them in person, and for whatever reason IIT seems to be a disproportionately large part of their identity - certainly much more so than any other theory I've come across. Of course, this is not unanimous, and I have found plenty of people on both sides of the debate willing to discuss IIT with an open mind, but there is certainly a core of proponents of IIT that talk of constellations of concepts in qualia space with an air of superiority that makes you feel like they must know something you don't in order to justify such a seemingly strong belief in their theory.

But, the math doesn't lie and I'm now convinced that this is some sort of social or psychological phenomenon in which the culture of IIT attracts believers for reasons that are anything but scientific - perhaps by promising an answer to one of the most difficult existential questions. While I don't personally like or approve of this approach to science, I can't help but recognize that one of the reasons we have come so far, so quickly, in formalizing the epistemic problems surrounding consciousness is that seemingly solid arguments against IIT did nothing to detract from its fan base. Had I been the inventor of IIT, the existence of philosophical zombies would have been a deal-breaker for me, as there are strong logical indictments against any theory that admits them [e.g. Harnad 1995] and IIT is clearly not immune to these arguments. But proponents of IIT refused to accept these indictments and, in doing so, forced stronger and stronger logical arguments out of those who dissent. Thus, the fact that IIT could not be easily dismissed demanded that the best possible arguments be brought forth against it, and these arguments, in their own right, apply much more generally than to IIT alone.

In light of this, I am curious to see what happens to IIT in the next five years. Ideally, I would hope to see an abrupt decline in the use of Phi and an increase in emphasis on the problems associated with the epistemology of consciousness, signaling the acceptance of the unfolding argument. However, I am not naive about the institutional inertia behind this theory. Not only are millions of dollars being spent to test it in a lab, but dozens of graduate students, postdocs, and professors have devoted significant time to pushing this theory forward in some form or another. To accept the notion that one must go back to step one (framing the problem) after so many years of hard work is a difficult thing to do. This, in combination with the historical tendency for proponents of IIT to pivot rather than truly address contradictions in the theory, makes me think it is equally likely that Phi lives on under a different mathematical guise with equally fatal problems buried under a mountain of confusing jargon. If this is the case, I probably will not give IIT the benefit of the doubt again.
